Next up in our “Patterns of Service-oriented Architecture” series, we’ll talk about dealing with highly normalized data that spans many tables and services (or otherwise has a large object graph reaching beyond a single database) by caching a denormalized version of it.

Intent

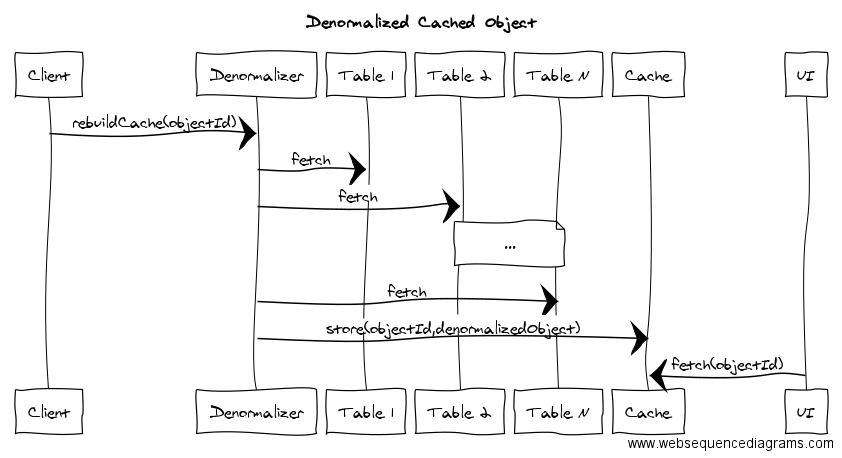

Build custom objects in a cache by querying several different tables, so that the data can be provided quickly via a single fetch from the cached data store.

Motivation

Canonical data in an operational database should be stored in a normalized schema. Such a schema is designed to prevent duplication and bad data, but it results in many interconnected tables that can be difficult to query. Although most database libraries ease the pain of writing such queries, running them can become quite expensive.

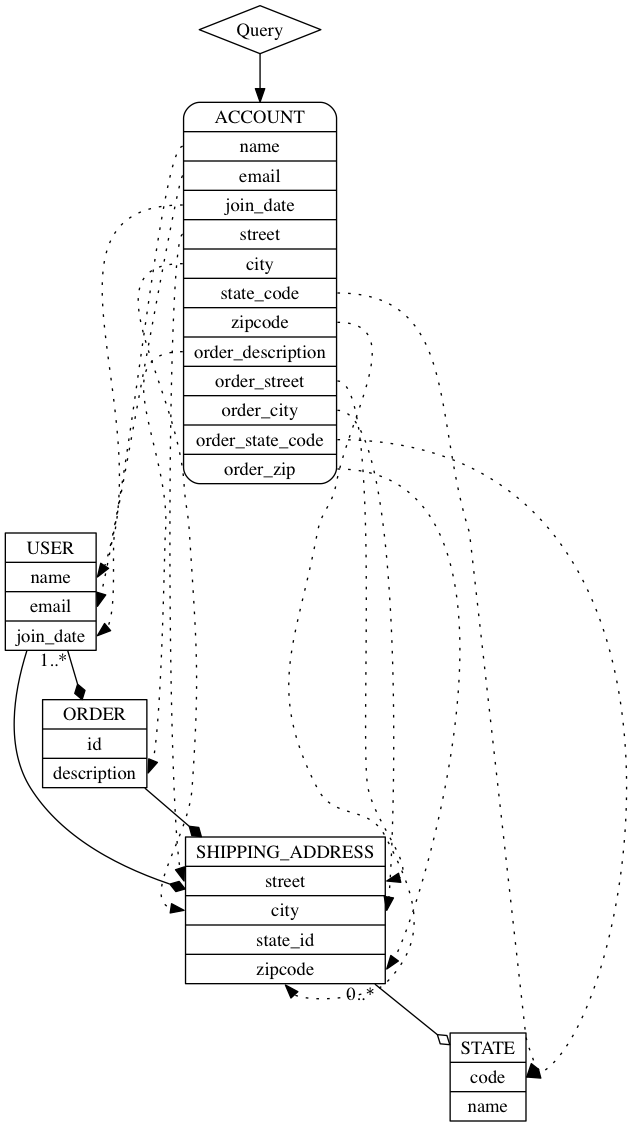

For example, suppose you have users, shipping addresses, and orders (that have addresses):

Suppose you need to create an “account” view that shows the user’s name, email, shipping address, and most recent order (along with its shipping address). Assembling that view takes several queries: for the user, the user’s address, the latest order, and that order’s address. Displaying it for many users multiplies the number of queries. If the data lives in a remote service (or several), each lookup becomes a network call and the process is even more involved. Eventually, the process can become slow enough to cause timeouts.
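To make the cost concrete, here is a minimal sketch of that account view assembled from normalized data. The in-memory `FakeDB`, its lookup methods, and the field names are hypothetical stand-ins for real tables; each lookup increments a counter so you can see how the work scales.

```python
from dataclasses import dataclass


@dataclass
class FakeDB:
    """Hypothetical in-memory stand-in for normalized tables; every lookup counts as one query."""
    users: dict      # user_id -> {"name", "email", "address_id"}
    addresses: dict  # address_id -> {"street", "city"}
    orders: list     # [{"id", "user_id", "placed_on", "address_id"}]; placed_on is an ISO date string
    query_count: int = 0

    def get_user(self, user_id):
        self.query_count += 1
        return self.users[user_id]

    def get_address(self, address_id):
        self.query_count += 1
        return self.addresses[address_id]

    def latest_order_for(self, user_id):
        self.query_count += 1
        mine = [o for o in self.orders if o["user_id"] == user_id]
        return max(mine, key=lambda o: o["placed_on"]) if mine else None


def account_view(db, user_id):
    """Assemble the account view with one query per related record."""
    user = db.get_user(user_id)
    address = db.get_address(user["address_id"])
    order = db.latest_order_for(user_id)
    order_address = db.get_address(order["address_id"]) if order else None
    return {
        "name": user["name"],
        "email": user["email"],
        "shipping_address": address,
        "latest_order": order,
        "latest_order_address": order_address,
    }
```

In this sketch a user with an order costs four lookups and a user without one costs three, so rendering N accounts costs roughly 3N to 4N queries; against a remote service, each of those would be a network round trip.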

If, instead, you ran the queries offline and built up a denormalized object (in this example, called Account), you could fetch it from a cache much more quickly.

A further motivating example and discussion can be found in “ElasticSearch and Denormalization in Rails”.

Applicability

Use this when you are experiencing performance problems building up a complex object from a normalized, relational store. This can also be used if data is required from more than one source (e.g. a database and a remote service).

Structure

The bulk of the logic lives in a Denormalizer, which knows what data sources to use in building up the complex object.
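A Denormalizer can be quite small. The following is a sketch, not a prescribed design: `Account`, `Denormalizer`, `fetch_account`, and the two source hooks are hypothetical, with one hook standing in for a database query and the other for a remote order service.

```python
from dataclasses import dataclass
from typing import Callable, MutableMapping, Optional


@dataclass(frozen=True)
class Account:
    user_id: int
    name: str
    email: str
    latest_order_id: Optional[int]  # comes from a (hypothetical) separate order service


class Denormalizer:
    """Knows which sources feed an Account and writes the result to a cache.

    `load_user` stands in for a database query and `latest_order_id` for a call
    to a remote order service; both are hypothetical hooks. `cache` is a plain
    dict here; in practice it might be Redis or a database table behind an adapter.
    """

    def __init__(
        self,
        load_user: Callable[[int], dict],
        latest_order_id: Callable[[int], Optional[int]],
        cache: MutableMapping[int, Account],
    ) -> None:
        self.load_user = load_user
        self.latest_order_id = latest_order_id
        self.cache = cache

    def rebuild(self, user_id: int) -> Account:
        row = self.load_user(user_id)             # source 1: the database
        order_id = self.latest_order_id(user_id)  # source 2: the remote service
        account = Account(user_id, row["name"], row["email"], order_id)
        self.cache[user_id] = account             # one write replaces the old copy
        return account


def fetch_account(cache: MutableMapping[int, Account], user_id: int) -> Optional[Account]:
    # Readers make a single lookup and never touch the underlying sources.
    return cache.get(user_id)
```

Because readers only call `fetch_account`, the cost of gathering data from both sources is paid once per rebuild rather than once per page view.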

The denormalized object should ideally be a well-defined type, like a struct or simple object, as opposed to a hash or dictionary. This results in a much clearer definition of what should be in the object, and makes changes to the denormalization process explicit through managed code changes.
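To make the struct-versus-hash point concrete, here is a sketch using Python frozen dataclasses for a hypothetical Account with a nested Address, serialized as JSON for a string-valued cache (JSON is an assumption; any serialization would do). Rebuilding through the constructors means a missing or unexpected field raises an error instead of silently producing a partial object.

```python
import json
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass(frozen=True)
class Address:
    street: str
    city: str


@dataclass(frozen=True)
class Account:
    name: str
    email: str
    shipping_address: Address
    latest_order_id: Optional[int]


def to_cache_value(account: Account) -> str:
    # asdict() recurses into the nested Address, so the whole object becomes plain JSON.
    return json.dumps(asdict(account))


def from_cache_value(raw: str) -> Account:
    data = json.loads(raw)
    # A missing or unexpected field raises KeyError or TypeError here,
    # whereas a plain dict would accept whatever happened to be stored.
    data["shipping_address"] = Address(**data["shipping_address"])
    return Account(**data)
```

With this shape, adding a field means changing the `Account` definition, which shows up in code review, and entries written under the old definition fail to load rather than quietly lacking the new field.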

As with all caching, there’s no easy way to keep the cached object up to date. Two common strategies (which can be combined) are rebuilding when the underlying data changes and rebuilding on a schedule.

Rebuilding on a schedule is simple: walk a list of root objects and ask the Denormalizer to rebuild the cached copy of each one. The drawback is that when the data set is large, rebuilding all of it can take too long.
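A scheduled rebuild might look like the following minimal sketch. `rebuild_all` and its `rebuild` hook are hypothetical names; the hook would call the Denormalizer for a single root object. Recording elapsed time is one way to notice when a full pass is growing too long for its schedule.

```python
import time
from typing import Callable, Iterable


def rebuild_all(root_ids: Iterable[int], rebuild: Callable[[int], object]) -> dict:
    """Walk every root object and ask the (hypothetical) rebuild hook to refresh it.

    Failures are collected rather than raised, so one bad record does not abort
    the whole run; the elapsed time is returned so the job can be monitored.
    """
    started = time.monotonic()
    rebuilt, failed = 0, []
    for root_id in root_ids:
        try:
            rebuild(root_id)
            rebuilt += 1
        except Exception:
            failed.append(root_id)
    return {"rebuilt": rebuilt, "failed": failed, "seconds": time.monotonic() - started}
```

A scheduler (cron or a job runner) would invoke this periodically with the full list of root ids.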

A better approach is to rebuild specific objects when their underlying data changes. This requires knowing when that data changed, which is not always easy. Often your database can tell you when data changes; if you are using a messaging system, you can also adopt a convention of sending a message whenever shared data changes.
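Event-driven rebuilding hinges on mapping a change to the root objects it affects. Below is a minimal sketch, assuming a hypothetical set of message types and a hypothetical `users_for_address` lookup; `rebuild` would be something like the Denormalizer’s per-object rebuild.

```python
from typing import Callable, List

# Hypothetical message shapes, e.g. published by the services that own the data:
#   {"type": "user.updated", "user_id": 1}
#   {"type": "order.created", "order_id": 7, "user_id": 1}
#   {"type": "address.updated", "address_id": 12}


def affected_user_ids(message: dict, users_for_address: Callable[[int], List[int]]) -> List[int]:
    """Map a change message to the root objects (users) whose Account embeds that data."""
    kind = message["type"]
    if kind in ("user.updated", "order.created"):
        return [message["user_id"]]
    if kind == "address.updated":
        # Shared data can appear in many Accounts, so look up every owner.
        return users_for_address(message["address_id"])
    return []  # messages that do not touch Account data are ignored


def handle_message(
    message: dict,
    rebuild: Callable[[int], object],
    users_for_address: Callable[[int], List[int]],
) -> List[int]:
    """Rebuild only the cached Accounts that depend on the changed data."""
    user_ids = affected_user_ids(message, users_for_address)
    for user_id in user_ids:
        rebuild(user_id)
    return user_ids
```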

In any case, the selection of underlying technology is important. You might be tempted to use a database view (or a materialized view, which caches the results of a view in an intermediate table). Those work well if your data is all inside the same data store and requires no calculation or transformation. If, however, your data comes from an external service, or is the result of some sort of transformation, you will need some code to produce the denormalized object.
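For the case where all the data lives in one store, a view can do the denormalization. This sketch uses Python’s built-in `sqlite3` module with hypothetical tables mirroring the users, addresses, and orders example. SQLite has plain views but no materialized views, so these joins still run on every read; PostgreSQL, for instance, offers `CREATE MATERIALIZED VIEW` to store the results.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE addresses (id INTEGER PRIMARY KEY, street TEXT, city TEXT);
CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT, email TEXT,
                    address_id INTEGER REFERENCES addresses(id));
CREATE TABLE orders (id INTEGER PRIMARY KEY, user_id INTEGER REFERENCES users(id),
                     placed_on TEXT, address_id INTEGER REFERENCES addresses(id));

-- Flattens users, their address, and their most recent order into one row per user.
CREATE VIEW accounts AS
SELECT u.id AS user_id, u.name, u.email,
       a.street || ', ' || a.city AS shipping_address,
       o.id AS latest_order_id,
       oa.street || ', ' || oa.city AS latest_order_address
FROM users u
JOIN addresses a ON a.id = u.address_id
LEFT JOIN orders o ON o.id = (
    SELECT id FROM orders WHERE user_id = u.id ORDER BY placed_on DESC LIMIT 1)
LEFT JOIN addresses oa ON oa.id = o.address_id;
""")

# Sample data (hypothetical).
conn.executemany("INSERT INTO addresses VALUES (?, ?, ?)",
                 [(10, "1 Main St", "Springfield"), (11, "9 Elm St", "Shelbyville")])
conn.executemany("INSERT INTO users VALUES (?, ?, ?, ?)",
                 [(1, "Ana", "ana@example.com", 10), (2, "Bo", "bo@example.com", 11)])
conn.executemany("INSERT INTO orders VALUES (?, ?, ?, ?)",
                 [(100, 1, "2020-01-01", 10), (101, 1, "2020-02-01", 11)])

rows = conn.execute("SELECT * FROM accounts ORDER BY user_id").fetchall()
```

This only works because all three tables share one database; data from a remote service, or values that need computation outside SQL, would still call for a code-based Denormalizer.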

Anti-Patterns and Gotchas

- Cached data naturally lags behind the source data. You will need to take this into account when designing your features. It could be sufficient to store the time when the data was last updated and surface that to users, but you may need something more sophisticated, such as partially rebuilding the cache when a user action changes the data they are looking at. Caching drives product decisions.

- If you rebuild your cache based on events, be aware of the ordering of events. You may get events out of chronological order (depending on the type of event system and the way you process events).

- Be cognizant of the durability of your cache. If rebuilding the cache is expensive, choose (and configure) a technology for fault tolerance and quick recovery. For example, if your cache lives only in your webserver’s memory, you could lose it at any moment, taking down your application as it struggles to rebuild the cache. Often, your relational database can hold your cache, since it’s likely the most durable data store you have. Other common options are Elasticsearch, Redis, and Memcached.
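Two of the gotchas above, out-of-order events and surfacing staleness, can be sketched together. This assumes (hypothetically) that each source record carries a version number that increases on every change and that events include it; the cache ignores any event older than what it already holds and stamps each write with a UTC timestamp that could be shown to users.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Dict


@dataclass(frozen=True)
class CachedAccount:
    user_id: int
    email: str
    source_version: int  # hypothetical version number that increases on every source change
    cached_at: datetime  # when this copy was written; can be surfaced as "last updated"


def apply_change(cache: Dict[int, CachedAccount], user_id: int, email: str, source_version: int) -> bool:
    """Apply a change event unless the cache already holds the same or a newer version.

    Returns True if the cached copy was replaced.
    """
    current = cache.get(user_id)
    if current is not None and current.source_version >= source_version:
        return False  # late or duplicate event: keep the newer data already cached
    cache[user_id] = CachedAccount(user_id, email, source_version, datetime.now(timezone.utc))
    return True
```

The read-compare-write here is not atomic; with a shared store such as Redis or a database table, the comparison would need to happen inside a transaction or conditional update. Another option is to treat each event only as a signal to re-read the current source data, so that event order matters less.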

See Also

- Parameterless Job

- Command Query Responsibility Segregation