As statisticians and data scientists, we often set out to test the null hypothesis. We acquire some data, apply some statistical tests, and see what the p-value is. If we find a sufficiently low p-value, we reject the null hypothesis, commonly denoted \(H_0\).

However, it’s worth remembering that statisticians have been testing \(H_0\) since at least 1933. Here at Stitch Fix, we thought it would be a good idea to build on that body of past work. Instead of embarking on yet another statistical analysis de novo, we decided to take a step back and look at how \(H_0\) has fared in past tests across a variety of disciplines.

Much Ado About Null

According to Google Scholar, “reject the null hypothesis” has shown up in 129,000 papers, whereas “confirm the null hypothesis” is in only 751. The naive interpretation is that \(H_0\) has been confirmed less than 0.6% as often as it has been rejected.

There are, however, some complicating factors. Authors sometimes write “We cannot reject the null hypothesis”, “We fail to reject the null hypothesis”, or “It is impossible to reject the null hypothesis”, and use similar phrasings about confirming it. When we naively count every paper containing the phrase “reject the null hypothesis”, we inadvertently include papers that do not actually reject \(H_0\).
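The exclusion step can be illustrated with a small filter. This is a minimal Python sketch, assuming we already have individual sentences in hand; the `NEGATIONS` and `PHRASE` patterns and the `classify` helper are hypothetical, and the counts in this post came from database searches, not from this code. Note also that the sketch labels sentences, whereas the databases count whole papers.

```python
import re
from typing import Optional

# Hypothetical list of negated phrasings that should NOT count as a rejection
# (or confirmation) of H0.
NEGATIONS = re.compile(
    r"\b(cannot|can not|can't|fail to|failed to|impossible to|unable to|do not|did not)"
    r"\s+(reject|confirm)\s+the\s+null\s+hypothesis",
    re.IGNORECASE,
)

# The bare phrase we are counting; \b and \s+ keep "rejected" from matching "reject".
PHRASE = re.compile(r"\b(reject|confirm)\s+the\s+null\s+hypothesis", re.IGNORECASE)


def classify(sentence: str) -> Optional[str]:
    """Return 'reject', 'confirm', or None if the sentence does neither outright."""
    if NEGATIONS.search(sentence):
        return None  # hedged or negated phrasing: exclude it
    match = PHRASE.search(sentence)
    return match.group(1).lower() if match else None


for s in [
    "We reject the null hypothesis at the 5% level.",
    "We fail to reject the null hypothesis.",
    "These data confirm the null hypothesis.",
]:
    print(repr(s), "->", classify(s))
```

A sentence that contains both a negated and a plain phrasing is dropped entirely here; that is a deliberately conservative choice for the sketch.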

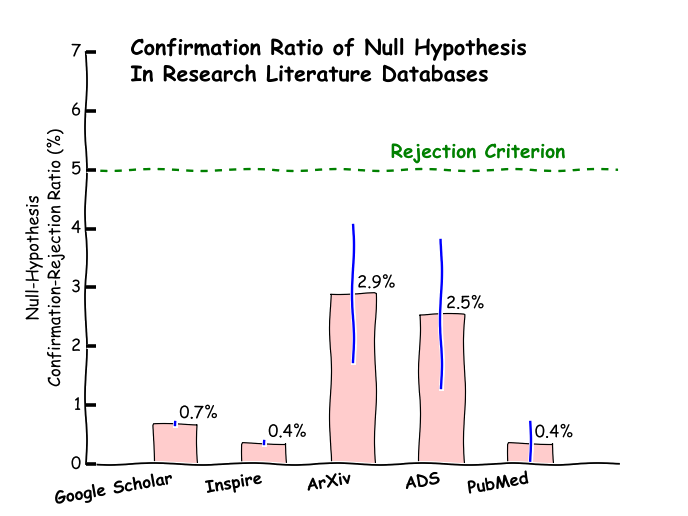

When we exclude these types of phrases, the raw counts fall, but the ratio barely moves. Google Scholar finds 102,000 papers that reject the null hypothesis and 700 papers that confirm it, or nearly 0.7% as many. Still, it seems clear that the null hypothesis is confirmed in well under 1% of published results.

Inspire is another large database containing information about published research. On Inspire, 45,000 papers have rejected the null hypothesis and 165 papers have confirmed it, or fewer than 0.4% as many.

It is also interesting to examine how \(H_0\) has performed in different disciplines.

The ArXiv preprint server hosts preprints from fields such as astrophysics, high-energy physics, and other areas of physics, as well as mathematics, computer science, quantitative biology, quantitative finance, and statistics. Its database identifies 207 papers that reject the null hypothesis and 6 papers that confirm it. Interestingly, this corresponds to a confirmation rate of 2.8%, roughly four to five times the Google Scholar rate and nearly an order of magnitude greater than the Inspire rate.

The SAO/NASA ADS database lists papers from the fields of astronomy and physics. Perhaps not surprisingly, the null hypothesis’s confirmation rate there is similar to ArXiv’s: 157 papers reject the null hypothesis vs. 4 papers that confirm it, for a confirmation rate of 2.5%.

In the biomedical sciences, we used Google to search PubMed. Here, we found 277 papers that reject the null hypothesis and just 1 paper that confirms it, which implies a confirmation rate very similar to that of Inspire (about 0.36%).
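To make the arithmetic behind these rates explicit, here is a small Python sketch that recomputes them from the counts quoted in this post. Two definitions are plausible, and the `ratio` and `share` helpers are our own labels: confirmations per rejection (the “X% as many” figures), and confirmations as a share of all papers counted. Most of the figures above agree under either definition; the ArXiv figure of 2.8% matches the share (6 of 213 papers), whereas the per-rejection ratio comes to about 2.9%.

```python
# Counts as reported in this post: (papers rejecting H0, papers confirming H0).
COUNTS = {
    "Google Scholar (raw)": (129_000, 751),
    "Google Scholar (filtered)": (102_000, 700),
    "Inspire": (45_000, 165),
    "ArXiv": (207, 6),
    "SAO/NASA ADS": (157, 4),
    "PubMed": (277, 1),
}


def ratio(reject: int, confirm: int) -> float:
    """Confirmations per rejection, in percent."""
    return 100 * confirm / reject


def share(reject: int, confirm: int) -> float:
    """Confirmations as a share of all papers counted, in percent."""
    return 100 * confirm / (reject + confirm)


for name, (rej, conf) in COUNTS.items():
    print(f"{name:26s} ratio={ratio(rej, conf):.2f}%  share={share(rej, conf):.2f}%")
```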

All’s Well That Ends Null

Overall, judging from the Google Scholar and Inspire databases, the null hypothesis appears to be confirmed in roughly 0.5% of published papers. This falls well below the standard 5% significance threshold, so it seems safe to conclude that we can get off the hamster wheel and stop testing \(H_0\). It’s just false.