Title: Geometric Methods for Machine Learning and Optimization

Talk Abstract:

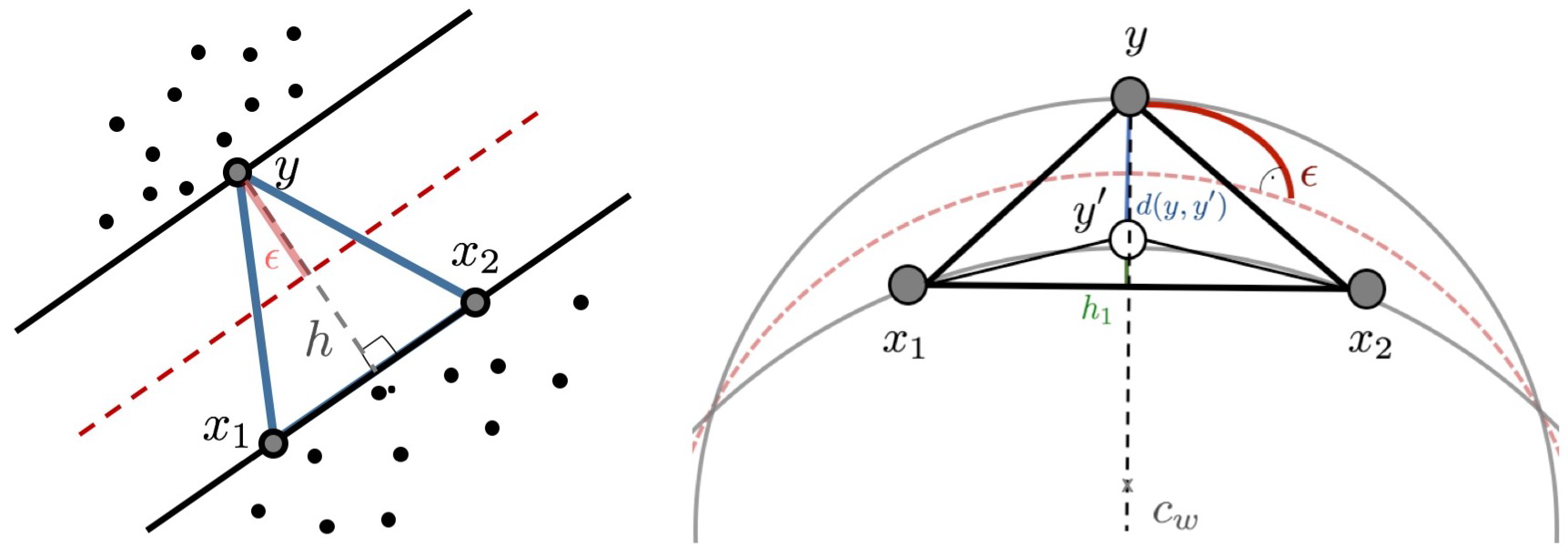

Many machine learning applications involve non-Euclidean data, such as graphs, strings, or matrices. In such cases, exploiting Riemannian geometry can deliver algorithms that are computationally superior to standard (Euclidean) approaches. This has led to growing interest in Riemannian methods in the machine learning community. In this talk, I will present two lines of work that utilize Riemannian methods in machine learning. First, we consider the task of learning a robust classifier in hyperbolic space. Such spaces have received a surge of interest for representing large-scale, hierarchical data, because they achieve better representation accuracy with fewer dimensions. We consider an adversarial approach for learning a robust large-margin classifier that is provably efficient. We also discuss conditions under which such hyperbolic methods are guaranteed to outperform their Euclidean counterparts. Second, we introduce Riemannian Frank-Wolfe (RFW) methods for constrained optimization on manifolds. Here, we discuss matrix-valued tasks for which RFW improves on classical Euclidean approaches, including the computation of Riemannian centroids and the synchronization of data matrices.

Date and Time:

The talk will be on Tuesday, Feb 2nd at 2:00 PM PST (10:00 PM UTC).

Zoom info:

Zoom Link Passcode: 800754

Speaker Info:

Melanie is a PhD student at Princeton University, where she is advised by Charles Fefferman. Her research focuses on understanding the geometric features of data mathematically and on developing machine learning methods that utilize this knowledge. Prior to her PhD, she received undergraduate degrees in Mathematics and Physics from the University of Leipzig in Germany. She also spent time at MIT’s Laboratory for Information and Decision Systems, the Max Planck Institute for Mathematics in the Sciences and the research labs of Facebook and Google.