Title: Step sizes for SGD: Adaptivity and Convergence

Talk Abstract:

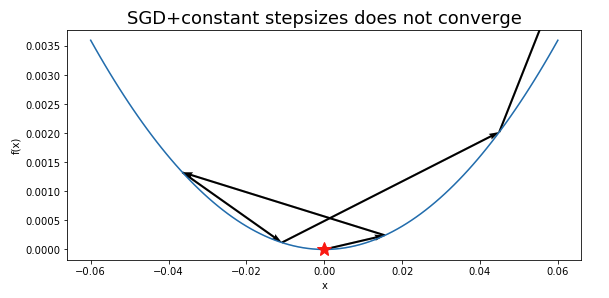

Stochastic gradient descent (SGD) has been a popular tool in training large-scale machine learning models. Yet, its performance is highly variable, depending heavily on the choice of the step sizes. This has motivated a variety of strategies for tuning the step size and research on adaptive step sizes. However, most of them lack a theoretical guarantee. In this talk, I will present a generalized AdaGrad method with adaptive step size and two heuristic step schedules for SGD: the exponential step size and cosine step size. For the first time, we provide theoretical support for them, deriving convergence guarantees and showing that these step sizes allow to automatically adapt to the level of noise of the stochastic gradients. I will also discuss their empirical performance and some related optimization methods.

Date and Time:

The talk will be on Tuesday, May 25th at 2:00PM PST. (9:00 PM UTC)

Zoom info:

Zoom Link PW: 810577

Speaker Info:

Xiaoyu Li is a Ph.D student at Boston University, where she is advised by Professor Francesco Orabona. She received her Bachelor’s Degree in Math & Applied Math from University of Science and Technology of China. She was a Ph.D candidate at Stony Brook University and worked as an intern at Nokia Bell Labs. Her primary research interests lie in stochastic optimization and theoretical machine learning. She currently works on understanding and designing optimization methods in machine learning, specifically, stochastic gradient descent and its variants, adaptive gradient methods, and momentum methods.