Motivation

Client feedback is at the heart of Stitch Fix. Our personalization engine is powered in large part by moments of interaction and engagement with our clients – these provide rich, actionable feedback and fuel our ability to adapt to rapidly changing client trends. At Stitch Fix, feedback comes in a variety of shapes: from Style Shuffle likes and dislikes and product ratings on style, quality, fit, size and price, to the rich, free form comments in Fix requests or reviews of items.

As our business continues to evolve, we are looking for innovative ways to act on all client feedback, whether it comes from a new client or someone who has been with us for years. In particular, we realized that free form text feedback is especially important for improving our recommendations and client satisfaction, yet it is challenging to distill for both our Stylists and Algorithms. In the case of Stylists, there can be a high volume of content to read through – imagine analyzing 100+ comments, Fix notes, and written feedback from a loyal client who has been with us for years. In the case of Algorithms, understanding human language has long been a difficult task for machine learning models. However, our analysis shows that it is essential to account for written feedback in our Algorithms in order to provide the best possible personalization for our clients.

This blog post goes behind the scenes of how we meet our clients’ expectations of personalization by acting on their written feedback at scale using recent advances in natural language processing (NLP), and how this solution opens doors to many other downstream applications.

Context

The recommendations from our technology are a complementary partner to our Stylists. These recommendations free them up from tedious tasks, enabling them to focus on what they are extraordinarily good at: building relationships with clients, being creative, and understanding the nuance and context of a client’s request. Our Stylists leverage these recommendations through a custom-built, web-based styling application. They then apply their judgment to select what they believe to be the best items for each Fix.

One of these time-consuming tasks is reading through client notes and feedback. Clients have sent hundreds of millions of notes on our platform. Clients may describe their preferences and specific needs in a Fix request, or provide feedback on a particular item their Stylist sent. Every time a Stylist prepares a Fix for a client, they are expected to read and take into consideration the content of these notes. Some of our most loyal clients submit a high volume of notes, and it can take significant time for a Stylist to acquaint themselves with the content.

We’ve already developed mechanisms to support our Stylists in absorbing this information. As Stylists review free form feedback from a client, they can highlight and save the most important information, such as what a client repeatedly mentions they really like or dislike or what a client already owns, in a structured way. Next time, when the same or a different Stylist styles this client, they can quickly reference these crucial facts ensuring the client is heard and their preferences are taken into account.

Can we do better?

While these tools are helpful for Stylists, we hoped to find a way to streamline this process and help our Algorithm absorb this information at scale, to ensure our clients are consistently recommended products that they love and that align with their past feedback.

We began to focus on a way to algorithmically extract insights from text feedback from clients and harness them in the recommendation system that aids Stylists in picking the best items for our clients. The solution was two-fold – first, we extract product characteristics from the client reviews and client preferences from all the different sources of text feedback they provide. Then, we leverage the characteristics mentioned to determine what products are the most and least suitable for our clients.

Opinio[1] - Extraction of product characteristics at scale

Extracting structured product characteristics from free form text feedback is no easy feat. We rely on our experts-in-the-loop, our Stylists, to collect the training data. Each Stylist reads a few hundred examples of client feedback notes and writes down all product characteristics that can be extracted from them. For example:

“Super soft and stretchy. I like the pattern.” → “soft, stretchy, patterned”

Altogether, our expert Stylists generated around 10k training examples. Thanks to this work and the resulting dataset, we can fine-tune a GPT-3 model that is able to extract product characteristics from client feedback it has not seen before.
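To make the shape of this training data concrete, here is a minimal sketch of how labeled pairs could be serialized for fine-tuning. It assumes the prompt/completion JSONL layout commonly used for fine-tuning GPT-3-style completion models; the exact format, the separator, and the stop sequence are illustrative assumptions, not a description of our production pipeline.

```python
import json

# Two labeled pairs taken from the examples in this post.
labeled_examples = [
    ("Super soft and stretchy. I like the pattern.", ["soft", "stretchy", "patterned"]),
    ("I would have kept this piece but the fabric is see through", ["sheer"]),
]

PROMPT_SUFFIX = "\n\n###\n\n"  # assumed separator marking the end of the prompt
STOP = " END"                  # assumed stop sequence closing each completion


def to_record(feedback, characteristics):
    """Turn one (feedback, characteristics) pair into a prompt/completion record."""
    return {
        "prompt": feedback.strip() + PROMPT_SUFFIX,
        "completion": " " + ", ".join(characteristics) + STOP,
    }


def to_jsonl(examples):
    """Serialize all pairs as JSON Lines, one record per line."""
    return "\n".join(
        json.dumps(to_record(feedback, chars), ensure_ascii=False)
        for feedback, chars in examples
    )


print(to_jsonl(labeled_examples))
```

Keeping the completion as a short comma-separated list mirrors how the Stylists wrote their labels, which makes the model output easy to split back into individual characteristics.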

You may ask why not just use a simple approach like TF-IDF? There are multiple reasons:

- Relevancy: There are many instances where the client leaves feedback about an attribute they wish the product had. A TF-IDF approach would mistakenly assign such attributes to the product. For example, let’s say a client left the following comment on a skirt: “LOVED this skirt. The deal breaker was that it did not have pockets.” A TF-IDF approach would result in a high score for “pockets” on this product.

- Abstraction and reasoning: Sometimes the attribute is not directly mentioned in the comment and some reasoning is required to extract it.

In these cases, TF-IDF fails to extract a meaningful attribute. Here are some examples of what TF-IDF can’t do:

- “Just not my style and too fitted. I like more flowy maxi dresses and the price of this dress is way too high for what it is.” → “fitted, expensive”

- “I would have kept this piece but the fabric is see through” → “sheer”

- “This style is better for someone much older than me” → “mature”
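The relevancy problem is easy to reproduce. The sketch below is a small, self-contained TF-IDF written with the standard library only (plain term frequency times log inverse document frequency, an assumed textbook variant rather than any specific library's weighting). It scores the skirt comment against two other comments quoted in this post.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphabetic tokens."""
    return re.findall(r"[a-z]+", text.lower())


def tf_idf(documents):
    """Return one {term: score} dict per document, using tf * log(N / df)."""
    tokenized = [tokenize(doc) for doc in documents]
    n_docs = len(tokenized)
    # Document frequency: in how many documents does each term occur?
    doc_freq = Counter(term for tokens in tokenized for term in set(tokens))
    scores = []
    for tokens in tokenized:
        counts = Counter(tokens)
        scores.append({
            term: (count / len(tokens)) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return scores


reviews = [
    "LOVED this skirt. The deal breaker was that it did not have pockets.",
    "Super soft and stretchy. I like the pattern.",
    "I would have kept this piece but the fabric is see through",
]

skirt_scores = tf_idf(reviews)[0]
top_score = max(skirt_scores.values())
# Terms tied for the highest score on the skirt comment.
print(sorted(term for term, score in skirt_scores.items() if score == top_score))
```

In this toy corpus, “pockets” lands among the top-scoring terms for the skirt even though the comment says the skirt has none. The scoring sees only which words occur, not whether the client is describing the product or wishing for something it lacks.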

Until recently, such problems were tackled with specialized algorithms and huge datasets, but thanks to new advances in NLP, outstanding results can be achieved with just a moderate amount of data. New language models, like GPT-3, are pre-trained on massive corpora to learn the syntax and semantics of the English language (and others), which has enabled us to fine-tune GPT-3 for our use case with our limited labeled dataset.

Tag it - Extraction of client preferences at scale

After we’ve extracted the attributes themselves, we need to determine from the client’s free form text whether they like or dislike these attributes. We once again leverage our Stylists (experts-in-the-loop) to “tag” free form comments – each expert reads a sample of a few hundred client notes and writes down what product characteristics the client likes and dislikes. For example:

“It all seems a bit too business casual for me. I’m looking for something a little bit more bohemian and casual. More funky styles for Austin concerts.” → “dislikes business casual, likes bohemian, likes casual, likes funky styles”

Again, our experts generated around 10k training examples. We then use this data to fine-tune a GPT-3 model that is able to extract client likes and dislikes from client notes at scale.
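Tags in this form are straightforward to turn into structured data. As a hedged illustration, the sketch below parses a tag string like the example above into separate lists of likes and dislikes; the function name and the returned dictionary layout are hypothetical choices for this example, not part of our system.

```python
def parse_preferences(tagged):
    """Split a comma-separated tag string into liked and disliked characteristics.

    Items that start with neither "likes" nor "dislikes" are ignored.
    """
    preferences = {"likes": [], "dislikes": []}
    for item in tagged.split(","):
        item = item.strip()
        lowered = item.lower()
        if lowered.startswith("dislikes "):
            preferences["dislikes"].append(item[len("dislikes "):].strip())
        elif lowered.startswith("likes "):
            preferences["likes"].append(item[len("likes "):].strip())
    return preferences


tags = "dislikes business casual, likes bohemian, likes casual, likes funky styles"
print(parse_preferences(tags))
```

Checking for “dislikes” before “likes” keeps the two prefixes unambiguous, and skipping unrecognized items means an unexpected model output degrades gracefully instead of raising an error.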

Accounting for structured insights in the recommendation system

Now that we have extracted the specific characteristics of products each client likes and dislikes, we can incorporate this knowledge into our algorithm, and therefore our recommendations to clients. After an analysis, we decided to first focus on suppressing items that had attributes a client disliked (and act on “boosting” items with positive attributes in the future stages of the project).

As you may already know from our previous blog posts (Simulacra and Selection), our Stylists have the ultimate say in what products end up in a client’s Fix. Our recommendation system helps them pick the best products by predicting what a client will like and what matches their size and budget. We algorithmically account for clients’ dislikes in our recommendations by reducing the probability of showing a product that has “negative” characteristics (those a client dislikes). This is achieved by adjusting levers already available in the recommendation system.

The advantage of having a Stylist is that human supervision can cover many edge cases. A Stylist is still able to override any of these interventions when a client specifically asks in a note for something they previously said they disliked. For example, if a client said they hate dresses, but then requests a dress for a wedding, Stylists can override this mechanism.

Before recommending a set of products for a client to a Stylist, we identify which of the products available for this client should be suppressed. There are multiple ways of finding these products – in this case, we made use of a search algorithm that leverages Opinio-extracted product characteristics plus the standard attributes we have for all products at Stitch Fix. For each client, we have a list of their disliked product characteristics. For each of these dislikes, we perform a search query to find which products in the currently available inventory match it. We then reduce the probability of showing those products to the Stylist as they find the best selections for the client.

For example, if a client has indicated in past notes that they don’t like “busy patterns,” that dislike has been extracted and incorporated into our algorithm. When a Stylist is working on a Fix for this client, the algorithm will suppress items currently available in the inventory that are tagged with a “patterned” attribute, helping the Stylist select items that are not patterned.
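The suppression step can be sketched in a few lines. This is an illustration under stated assumptions, not our recommendation system: the `Product` class, the exact-match `matches` function (a stand-in for the search query), and the multiplicative `SUPPRESSION_FACTOR` are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Product:
    name: str
    score: float  # stand-in for the probability of being shown to the Stylist
    characteristics: set = field(default_factory=set)


SUPPRESSION_FACTOR = 0.5  # assumed penalty applied once per matched dislike


def matches(product, dislike):
    """Stand-in for the search query: exact match on a product characteristic."""
    return dislike in product.characteristics


def suppress(products, dislikes, factor=SUPPRESSION_FACTOR):
    """Down-weight products matching any dislike and return them ranked by score."""
    adjusted = []
    for product in products:
        hits = sum(1 for dislike in dislikes if matches(product, dislike))
        adjusted.append(
            Product(product.name, product.score * factor ** hits, set(product.characteristics))
        )
    return sorted(adjusted, key=lambda p: p.score, reverse=True)


inventory = [
    Product("floral blouse", 0.9, {"patterned", "soft"}),
    Product("plain tee", 0.6, {"soft"}),
]

for product in suppress(inventory, ["patterned"]):
    print(product.name, round(product.score, 2))
```

Suppressed products are down-weighted rather than removed, so they remain available to a Stylist who decides to override the intervention.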

Experimentation is in progress to assess the impact of these interventions on our client satisfaction.

Ideas for other use cases

As a result of our work, we generated a large data set of structured insights about our products and client preferences. Leveraging this data set to improve recommendations for our clients was the highest priority, but there are many other applications of this data. As mentioned earlier, advances in machine learning have opened doors to many possibilities, and if we can combine the results of this work with other cutting-edge models, we can unlock many new, unforeseen capabilities.

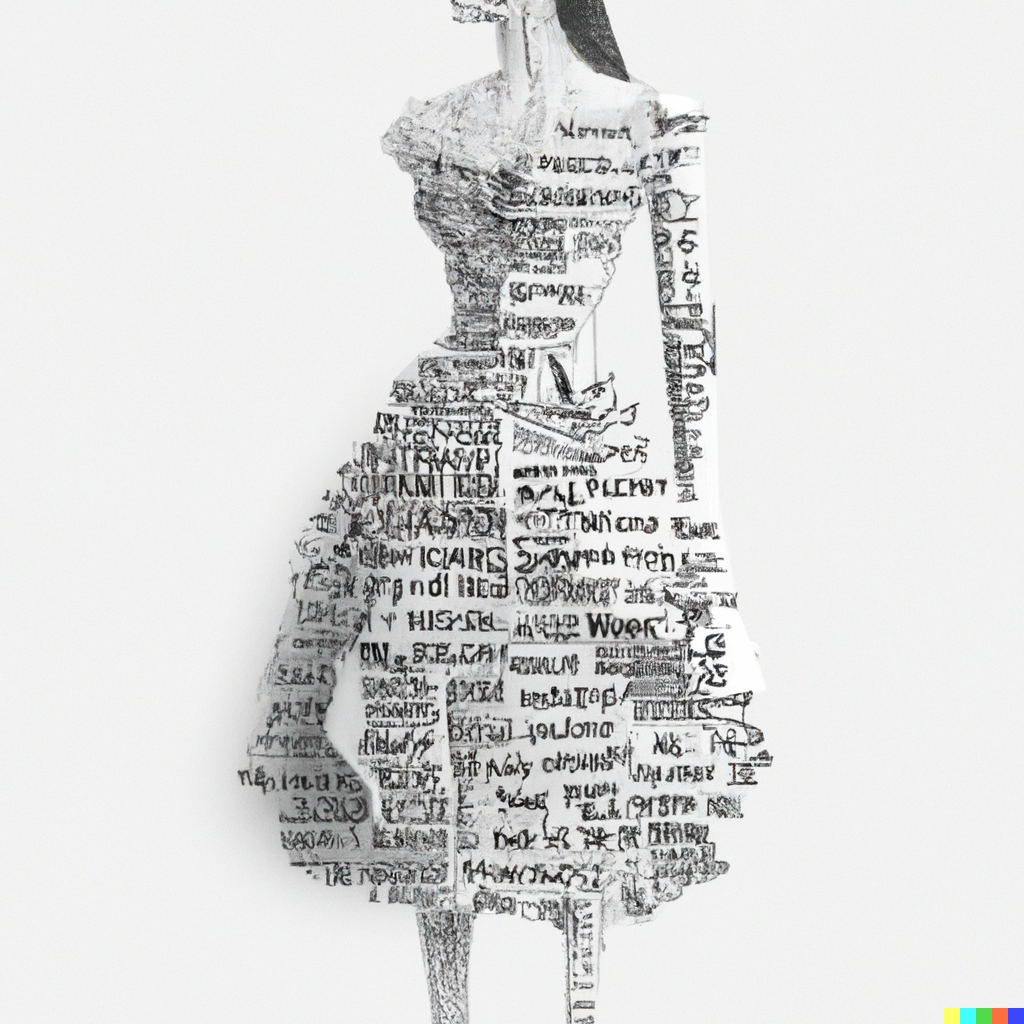

For example, we experimented with utilizing DALL-E 2 to visualize our products based on extracted structured product characteristics that have a high TF-IDF score. This way we could surface the most informative characteristics of a product in a visual way:

Opinio product characteristics: high rise, skinny, great color, red, fun color, great fit, stretchy, without distressing jeans

Image generated by DALL-E 2:

The actual product:

Opinio product characteristics: soft, olive green, great color, pockets, patterned, cute texture, long, cardigan

Image generated by DALL-E 2:

The actual product:

Moreover, Opinio product characteristics can help improve the precision and recall of search queries for our products. They can also help flag issues with our inventory. When tracked over time, they can be an interesting source of insights about client preferences and how they change. Finally, we could also surface them directly to either our Stylists or clients to help them understand in more detail what the qualities of each product are and bring more confidence to their purchase or recommendation decisions.

This is one of the many ways we incorporate data into our decision making and recommendations. We’re continuing to build on these efforts and find new ways to utilize our clients’ feedback for long term trust and satisfaction in our recommendations.

Footnotes

[1] The name was generated by us using GPT-3.