This is the first installment in the “Patterns of Service-oriented Architecture” series of posts, and we’ll start with a widely applicable pattern called Asynchronous Transaction. It’s a simple pattern to use when your service must perform long-running tasks before giving a definitive result to its consumer.

Intent

Use this pattern when a long-running operation must be performed and the caller needs to know when it is complete or what its result was, for example when charging a credit card.

Motivation

Some operations are either lengthy or take an unpredictable amount of time. Often, these are operations performed by a third party. We naturally want to wrap that third party in a service, which means the service can be no faster than the third party (or long-running operation) it wraps.

To combat this, the caller initiates a request for work to be done and is given a reference ID with which to refer to the work, or transaction, later. The caller can then ask the service for the status of this transaction using that reference ID. The caller could also be notified via messaging.

For example, suppose we wish to generate shipping labels. This requires calling out to FedEx, parsing the response, and saving the label’s image somewhere accessible. The caller provides information about the label they want, and the shipping-labels service does a basic validity check of the request. It then queues a Background Job to do the work of generating the label and returns a reference ID to the caller.

The caller can then use the reference ID it was given to ask for an update, often by polling. When the Background Job completes, the shipping-labels service updates its internal state to indicate the request has completed. The next time the caller asks about the request, the service indicates it’s completed and provides the label to the caller.

Choosing the right polling interval can be tricky. If the interval is too short, you might overwhelm the service (though the service can mitigate this by supporting conditional GETs). If it is too long, the consumer waits longer than necessary for results. For example, if you poll once per second but the operation takes only 200ms, the consumer wastes as much as 800ms waiting.

You’ll have to fine-tune this for your use-case, but you can also use messaging (see alternate flow below) to be notified the instant your work is done, and avoid polling altogether.
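One common compromise between the two extremes is to start with a short interval and lengthen it after each unsuccessful check. The following is a minimal sketch of that idea, not a prescribed client. The function name `poll_until_complete`, the `fetch_status` callable, the `"status"` key, and all default timings are assumptions made for illustration.

```python
import time


def poll_until_complete(fetch_status, initial_delay=0.05, max_delay=1.0,
                        timeout=10.0, sleep=time.sleep, clock=time.monotonic):
    """Call fetch_status() until it stops reporting "in_progress".

    fetch_status is a hypothetical callable that asks the service about one
    request and returns a dict with a "status" key.
    """
    delay = initial_delay
    deadline = clock() + timeout
    while True:
        result = fetch_status()
        if result["status"] != "in_progress":
            return result
        if clock() >= deadline:
            raise TimeoutError("request did not complete in time")
        sleep(delay)
        # Double the wait after every miss, up to max_delay, so quick
        # operations are noticed quickly and slow ones are polled less often.
        delay = min(delay * 2, max_delay)
```

Passing `sleep` and `clock` as parameters is only there to make the function easy to exercise without real waiting. The right starting delay and cap still depend on your use-case.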

Applicability

Use this as your service’s API if:

- You are wrapping any third-party API.

- Operations will be lengthy.

- You cannot guarantee the performance characteristics of the operation, for example if the 95th-percentile response time is slow even though the median is quite fast.
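To make the last point concrete, here is a small sketch that compares the median with the 95th percentile for a set of response times. The sample numbers are invented purely for illustration, and `latency_summary` is a hypothetical helper name.

```python
import statistics


def latency_summary(samples):
    """Return (median, 95th percentile) for a list of response times in seconds."""
    median = statistics.median(samples)
    # quantiles(n=100) returns 99 cut points; index 94 is the 95th percentile.
    p95 = statistics.quantiles(samples, n=100)[94]
    return median, p95


# Made-up data: 90 fast calls and 10 slow ones.
samples = [0.2] * 90 + [3.0] * 10
median, p95 = latency_summary(samples)
print(f"median={median:.1f}s p95={p95:.1f}s")
```

With data shaped like this, most callers see a fast response, yet a noticeable fraction wait far longer, which is the situation where an asynchronous API is worth considering.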

Structure

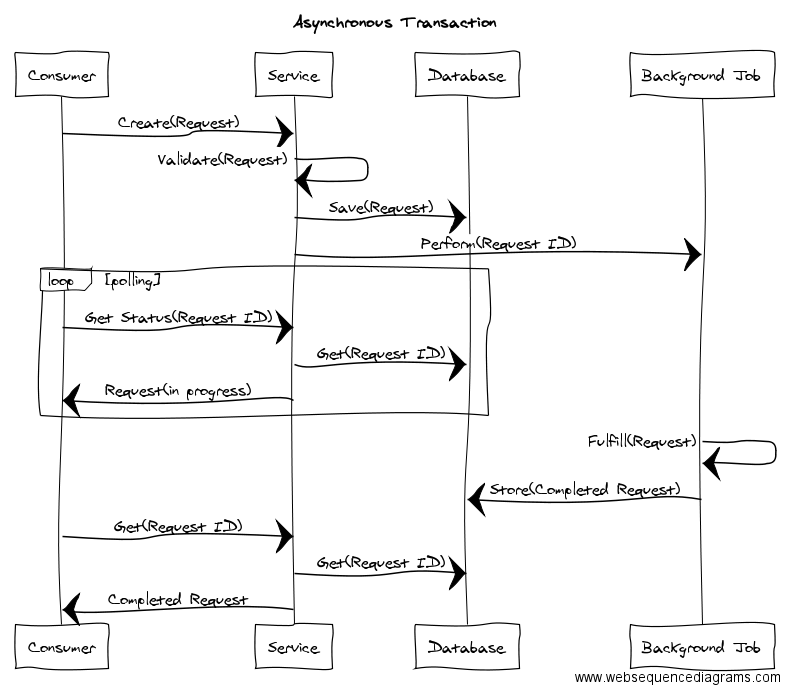

This pattern is implemented like so:

In words:

- Consumer initiates a request for work to be done.

- Service validates the request for basic correctness and writes it to the database.

- Service queues a Background Job to fulfill the request.

- Service returns a request ID to the Consumer.

- Consumer polls the Service to see if the Request with the given ID is completed.

- The Service returns “in progress” while the request is still being fulfilled.

- When the Background Job fulfills the request, the job updates the request’s state in the database.

- The next time the Consumer checks, the Service has fulfilled the request and returns the completed results.
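The steps above can be sketched in a few dozen lines. This is a toy, in-memory illustration under stated assumptions, not a production design: the class name `LabelService`, its method names, and the status strings are hypothetical; a dictionary stands in for the database, a thread pool stands in for the Background Job queue, and a short sleep stands in for the slow third-party call.

```python
import threading
import time
import uuid
from concurrent.futures import ThreadPoolExecutor


class LabelService:
    """Toy stand-in for the shipping-labels service (all names hypothetical)."""

    def __init__(self, workers=2):
        self._requests = {}            # plays the role of the database
        self._lock = threading.Lock()
        self._pool = ThreadPoolExecutor(max_workers=workers)  # plays the job queue

    def create_request(self, address):
        # Basic validation only; the slow work happens in the background.
        if not address:
            raise ValueError("address is required")
        request_id = str(uuid.uuid4())
        with self._lock:
            self._requests[request_id] = {"status": "in_progress", "label": None}
        # Queue the Background Job, then hand the ID straight back.
        self._pool.submit(self._generate_label, request_id, address)
        return request_id

    def get_request(self, request_id):
        # Return a copy so callers cannot mutate the stored state.
        with self._lock:
            return dict(self._requests[request_id])

    def _generate_label(self, request_id, address):
        time.sleep(0.05)  # stands in for the slow third-party call
        label = f"LABEL for {address}"
        with self._lock:
            self._requests[request_id] = {"status": "completed", "label": label}
```

A consumer would call `create_request`, keep the returned ID, and call `get_request` with it until the status changes from `"in_progress"` to `"completed"`. A real service would also need to record failures, which this sketch leaves out.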

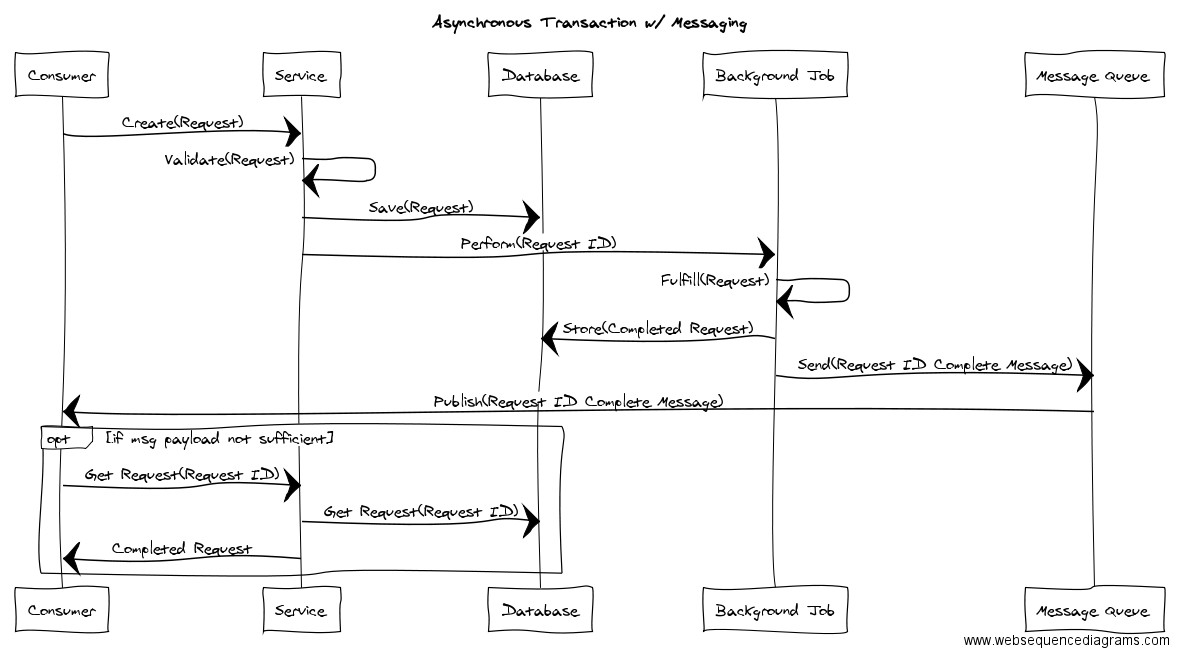

Alternate Structure - Messaging

If you have a messaging system set up, such as RabbitMQ, you can eliminate the need for polling by sending a message on the message bus when the original request is complete. The payload could be the entire completed request, or just the ID (in which case, the Consumer can request the full details from the Service).

If you do have a messaging system set up, this structure is more fault-tolerant and manageable, because consumers react to changes as they happen rather than polling for them. The downside is that a transaction that never completes never produces a message, so you may need monitoring for transactions you’ve initiated that never complete.
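The shape of the messaging flow can be sketched without a real broker. In the example below an in-process `MessageBus` class stands in for a system such as RabbitMQ; it is not RabbitMQ's API, and every name in it (`MessageBus`, `start_label_request`, `wait_for_completion`, the message fields) is an assumption made for illustration. The timeout in `wait_for_completion` is one simple way to notice a transaction that never completes.

```python
import queue
import threading
import time
import uuid


class MessageBus:
    """Minimal in-process bus; a real system would use a broker instead."""

    def __init__(self):
        self._subscribers = []

    def subscribe(self):
        inbox = queue.Queue()
        self._subscribers.append(inbox)
        return inbox

    def publish(self, message):
        for inbox in self._subscribers:
            inbox.put(message)


def start_label_request(bus, address):
    """Start the work in the background and return the request ID at once."""
    request_id = str(uuid.uuid4())

    def job():
        time.sleep(0.05)  # stands in for the slow third-party call
        # Here the payload carries the whole result; it could be just the ID.
        bus.publish({"request_id": request_id, "status": "completed",
                     "label": f"LABEL for {address}"})

    threading.Thread(target=job, daemon=True).start()
    return request_id


def wait_for_completion(inbox, request_id, timeout):
    """Return the completion message, or None if none arrives in time."""
    deadline = time.monotonic() + timeout
    while True:
        remaining = deadline - time.monotonic()
        if remaining <= 0:
            return None
        try:
            message = inbox.get(timeout=remaining)
        except queue.Empty:
            return None
        if message["request_id"] == request_id:
            return message
```

The consumer subscribes before initiating the request so that it cannot miss the completion message, then blocks on its inbox instead of polling the service.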

Anti-Patterns/Gotchas

Since this pattern is used when the consumer needs the results of a potentially slow operation, it’s possible for the operation to take far longer than it needs to if the Background Jobs aren’t managed properly. A simple example would be an operation that takes 5 seconds to complete. If 20 requests are made at the same time, but only one Background Job is processed at a time, the last request in line will take 5 × 20 = 100 seconds to complete!

As such, you’ll need to make sure your capacity to process Background Jobs is sufficient to meet whatever SLA you want your Service to deliver to Consumers.
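The arithmetic generalizes to more than one worker. The sketch below is a back-of-the-envelope model only: it assumes every job takes the same time, all requests arrive at once, and queuing overhead is zero. The function names are hypothetical.

```python
import math


def worst_case_seconds(requests, seconds_per_job, workers):
    """Time until the last of `requests` simultaneous jobs finishes."""
    batches = math.ceil(requests / workers)
    return batches * seconds_per_job


def workers_needed(requests, seconds_per_job, target_seconds):
    """Smallest worker count that finishes every job within target_seconds."""
    batches_allowed = int(target_seconds // seconds_per_job)
    if batches_allowed < 1:
        raise ValueError("target is shorter than a single job")
    return math.ceil(requests / batches_allowed)


print(worst_case_seconds(20, 5, 1))   # 100, the single-worker case above
print(worst_case_seconds(20, 5, 4))   # 25
print(workers_needed(20, 5, 15))      # 7
```

Real workloads are burstier and job times vary, so treat numbers like these as a starting point for capacity planning rather than a guarantee.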