“I want it all, I want it all, I want it all, and I want it now” – Freddie Mercury (Queen).

In their mid-80’s rock song I Want It All, it seems like Queen did not want to acknowledge the reality that life is all about tradeoffs. There is no escaping this reality in business settings. Most businesses face some form of tradeoff, which arises when there are competing objectives. A classic example is growth (customer base or revenue) versus profitability. But tradeoffs are pervasive in many other business aspects as well [1] . For example, a car manufacturer wants to minimize production costs while maximizing comfort. A restaurant owner wants to maximize the number of patrons they can seat, while minimizing meal wait times. Even if a company is aware that some trade-offs are being made - and it is often only a fuzzy intuition of the relationship between such objectives - quantifying that trade-off is not usually attempted.

Like other companies, Stitch Fix has multiple objectives, some of which can compete with each other (in the sense that improving one comes at the cost of the other). When designing experiences to show a client on a prominent section of the app/website, we need to be aware of how each choice affects the client. And ultimately, we need to understand how these choices impact different business metrics. How do we decide what to optimize for, when we care about multiple, often conflicting, metrics? How do we direct clients’ valuable (and finite) attention?

In this post we will be describing a method to make trade-offs explicit, and show how that empowers decision making for the business. First we’ll introduce the concept of a Pareto frontier. Then we’ll discuss the method: a multi-objective multi-arm bandit (MAB) system that allows us to quantify our trade-offs when faced with competing objectives. Finally, we will discuss ways in which competing objectives can be prioritized.

To make it relatable, and easy to follow along, we’re going to anchor the rest of this post in another 80’s classic, the Ms. Pac-Man video game! At this point, if you get the feeling that we really like the 80’s, you’re not wrong :).

Multiple objectives and pareto optimality: Capturing important tradeoffs

Let’s suppose you’re in the business of designing the Ms. Pac-Man video game franchise. For the games to be a hit, you need players to enjoy them, which ultimately leads to sales. In other words

\[\textrm{video game sales} = f(\textrm{game enjoyment})\]Enjoyment is a complex phenomenon, and depends on many aspects of the game design, and also on a gamer’s particular playing style. However, broad characteristics of a game that make it enjoyable are things like (1) playability and (2) skill differentiation. A game which is playable is usable by a wide range of people. A game that rewards superior tactics differentiates gamers’ skills. The impact of game design on these enjoyment characteristics is an active area of research [2] , [3]. If a game is severely lacking in one or both of these aspects, it could lead to its quick demise1. The tradeoff therefore can manifest when designing the game to be broadly playable, but also make it challenging to the motivated gamers.

When developing a Ms. Pac-Man game, let’s assume you capture proxy metrics that are known to correlate well with key business goals. Daily active player sessions can be a proxy for playability, with more sessions indicating more playability. Game score variance can be a proxy for skill differentiation. The larger the variance, the more players’ gaming skills are differentiated.

The goal of your game design strategy is to maximize both objectives: playability P, and skill differentiation S, as this leads to a more enjoyable game. In other words, we have a multi-objective optimization problem. So far so good, but in order to do this, you’re faced with two design considerations (in reality, the design factors of the original Ms. Pac-Man were many! [4] ):

(design consideration 1) the ghost chase strategy: the ghost chase strategy determines how Inky, Blinky, Pinky and Clyde chase Ms. Pac-man. This includes things like chase tactics, speed, etc. Denote this choice vector by \(x_G\).

(design consideration 2) the energizer pellet effect: pellets give Ms. Pacman temporary immunity and allow the ghosts to be chased and eaten for a limited time. This effect determines things like the ghost scatter tactics, how long they are frightened before resuming their chase, etc. Denote this choice vector by \(x_P\).

A design choice is therefore a pair of vectors (\(x_G\) , \(x_P\)). And the Ms. Pac-Man design problem boils down to:

\[max ( S(x_G ,x_P), P(x_G ,x_P) )\]subject to some constraints.

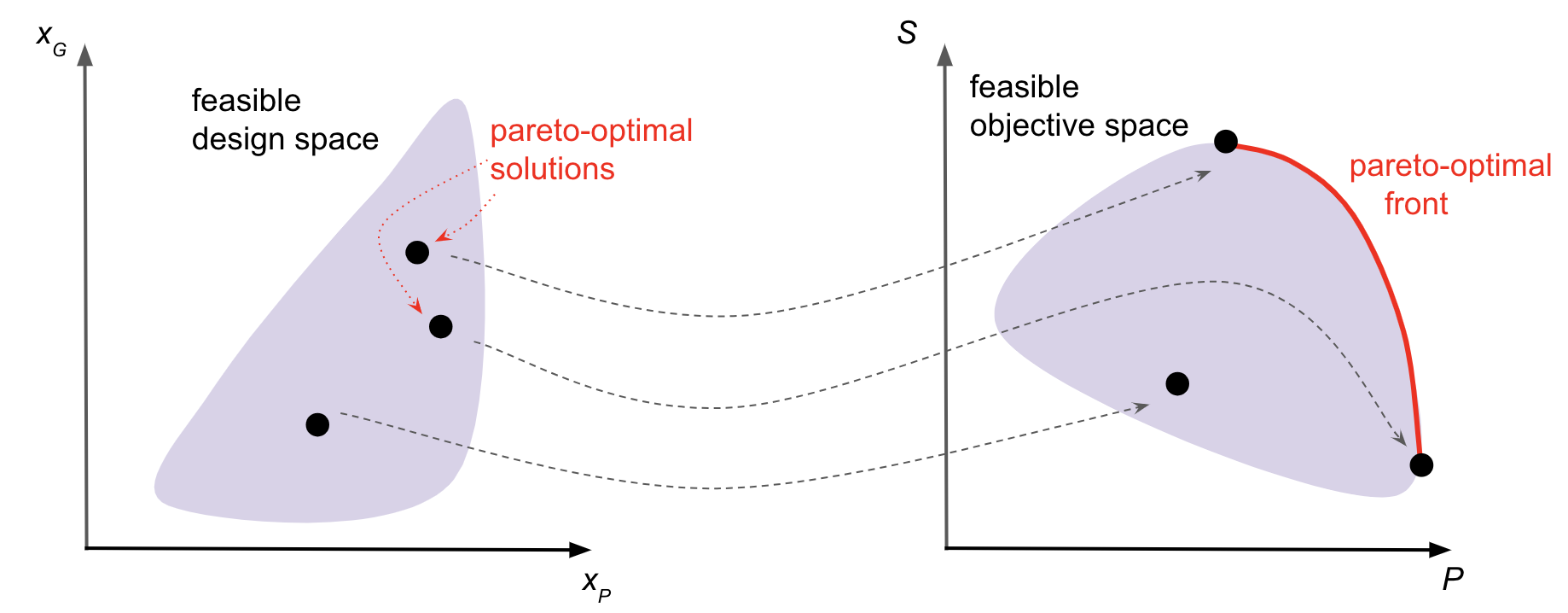

In optimization problems with a single objective, one solution is demonstrably better than another solution by comparing both values of the objective function. In multi-objective optimization problems, the “goodness” of a solution is a little more nuanced. A feasible design solution (\(x’_G\) , \(x’_P\)) will have one of the following cases apply to it:

- Dominated in all objectives → other solutions have better S and better P values

- Dominating in all objectives → no other solutions have better S or better P values

- Conflicting objectives → other solutions are better on S but worse on P, or vice versa

Solutions that are either dominating or conflicting (i.e. B or C) are non-dominated. By going through this exercise with all feasible solutions, one ends up with the Pareto optimal design set, which is defined as the set of non-dominated solutions. These solutions trace a Pareto frontier in the objective-function space. The frontier shows the tradeoffs, between playability and skill differentiation, due to different game design choices.

Multi-objective multi-arm bandits

To be able to make informed design decisions about ghost chase strategies, and power pellet effects, we need data! A multi-armed bandit (MAB) allows efficiency in understanding, and exploiting, the impact of design choices on playability and skill differentiation outcomes. By continuously assigning players at random to various arms (design choices), and then exploiting the winning strategy, we are in good shape to test and iterate2.

Once we have the data, we have to approach the multi-objective optimization problem differently than a standard MAB [5]. This is because of the property mentioned earlier regarding “goodness” of a solution. In a well-defined standard MAB, there is usually no ambiguity about optimal solutions. In a multi-objective MAB, there is a tradeoff at the Pareto frontier between objectives. Something or someone has to decide the relative importance of the objectives. As the producer of Ms. Pac-Man franchise, how much should you care about playability compared to skill differentiation of the game?

A common method to help make this decision is to scalarize, converting the multi-objective optimization problem into a more familiar single objective problem. The simplest approach is linear scalarization (this has limitations, which more complex methods try to overcome [5]). This approach defines the single objective as a weighted sum of the multiple objectives. The original Pac-Man design problem now becomes:

\[max( λ_S·S(x_G ,x_P) + λ_P·P(x_G ,x_P) )\]subject to some constraints and \(λ_S , λ_P > 0\) and \(λ_S + λ_P = 1\).

How do we set these weights? We will get to that in the next part, but for now assume they’re pre-determined.

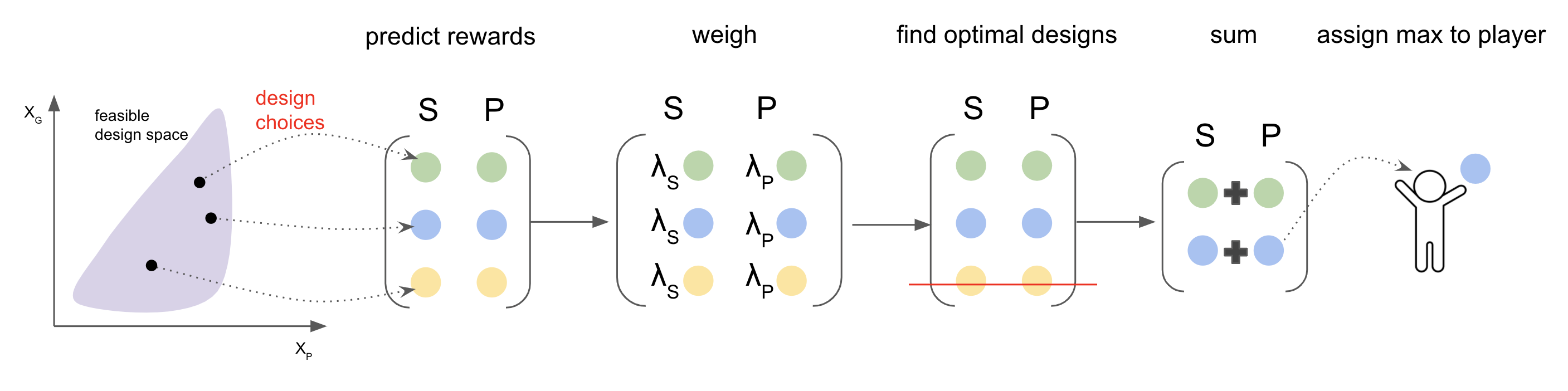

We mentioned earlier that playability and skill differentiation can also depend on player characteristics. This potentially important information can be taken into account by extending the MAB to be contextual. The overall approach looks like this:

- Suppose you’re considering K design choices of Ms. Pac-Man (i.e. different ghost chase strategies and energizer pellet effects)

- From the randomly collected data in the explore phase of the MAB, train models to predict the objectives (P, S) for each design \(i = 1, ..., K\).

- For a new round of data, predict the set of K objectives (P, S) for each player and weight each objective given a fixed set of weights.

- Select the subset \(A^*\) of design choices (arms) that are Pareto-optimal, meaning they are non-dominated in the objective space3. at this point, any design choice from \(A^*\) is just “as good” as any other, from the point of view the playability (P) and skill differentiation (S) objectives.

- Finally, for each optimal design choice, compute the scalarization function and select the choice in \(A^*\) that maximizes the scalarization function. This gives you the best design choice for your pre-determined weights4.

Choosing your weights

We mentioned in the previous section that we scalarize to convert our multi-objective problem to a single objective function problem. The question remains, how should we choose the weights? The most uninformed decision is just to set equal weights. Another possibility is to rank the objectives according to some external criteria. For example, there might be belief from the marketing team that playability is more important than skill differentiation. Once the decision-makers provide a ranking, we need to translate the ranking into weights. One such method to do this is using the rank order centroid [6]. This method assigns weights to M ranked items where the i-th item gets a weight

\[λ_i = \frac{1}{M} \sum_{k=i}^M(\frac{1}{k}).\]In the case where playability is the more important objective, the assigned weights could then be \(λ_P = 0.75\) and \(λ_S = 0.25\) for example.

Finally, it’s possible that the decision-makers are ambivalent about the ranking of objectives, but would like to understand the impact of different ranking choices on business outcomes. If the relative importance of playability and skill differentiation reversed, what would the impact be on game enjoyment? And how would that ultimately affect business metrics like game sales? In a scenario like this, you could run user-level A/B tests with both weight combinations simultaneously (\(λ_P = 0.75\), \(λ_S = 0.25\) and \(λ_P = 0.25\), \(λ_S = 0.75\)). If there were more than two objectives, the ranking possibilities grow pretty quickly, and it could be challenging to answer all the combinations efficiently.

Conclusion

The framework outlined above, illustrated using the development of Ms. Pac-Man, is extensible to any business setting that has multiple objectives, and the ability to test and measure. Multiple objectives come hand in hand with tradeoffs, and the best thing data scientists can do is to make the tradeoffs visible to the business. A critical piece of this approach is the involvement of the decision makers. Afterall, priorities are constantly changing as strategies shift. At Stitch Fix, our algorithmic systems clarify these tradeoffs, and our product and finance partners are active participants in navigating them. We believe that’s how you go from just playing the game, to winning the game!

References

- Stewart et al: Real-World Applications of Multiobjective Optimization↩

- Togelius et al: Multiobjective Exploration of the StarCraft Map Space↩

- J.H Kim and R. Wu: Leveraging Machine Learning for Game Development↩

- Kirbykid: Pac-Man Design: Variables of Difficulty↩

- M. Drugan and A. Nowe : Designing multi-objective multi-armed bandit algorithms: a study↩

- M. Danielson and L. Ekenberg: Trade-Offs for Ordinal Ranking Methods in Multi-criteria Decisions↩